Generative AI for 3D Worlds: The Ultimate Guide to Building the Metaverse (2025 Edition)

Honestly, teaching an AI is pretty much like training a newbie pilot. You wouldn’t just toss them into the sky, right? You stick ‘em in one of those wild flight simulators first. Let ‘em crash, burn, and freak out over fake thunderstorms. That’s how they get good. This guide on Generative AI for 3D Worlds shows how AI gets its own version of that flight sim.

Synthetic data might sound complex, but it’s already shaping how AI learns, and it can do so more safely and with fewer privacy headaches. Instead of scraping together expensive, privacy-nightmare real-world data, tech folks now just whip up their own. That trick is unblocking a ton of progress in AI right now. This isn’t just another dry technical walkthrough; we’re about to rip open this tech and break it down without all the jargon.

Estimated reading time: 11 min.

From Pixels to Places: The Evolution Behind Generative AI for 3D Worlds

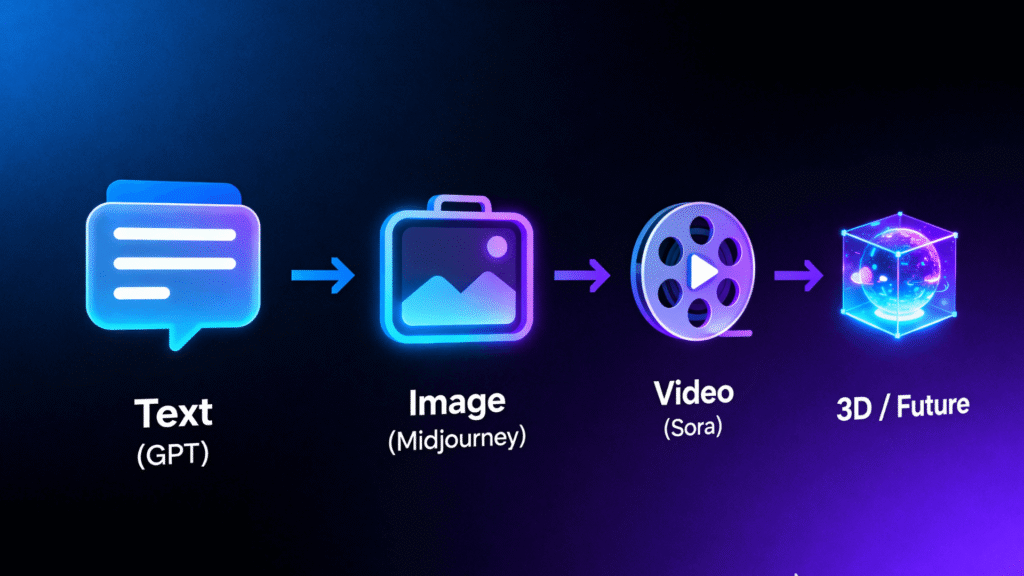

Let’s back up for a sec. If you wanna get why 3D world generation is such a big deal, you gotta look at how generative AI crawled out of the digital swamp. It started simple: text, then images, then video. Each step has been a massive leap, and now, bam, we’re at the point where AI can build entire places you can step into. Wild times.

First came text generators like GPT. Then came image generators like Midjourney. More recently, video generators like OpenAI’s Sora have been throwing out wild, actually-watchable video clips. You can see where this is all heading: every year, this tech chases more layers, more depth, more “oh wow, that’s actually real” moments.

But jumping from 2D or video to full-on 3D? That’s where things get truly bonkers. A 3D world is a whole other beast. You’ve gotta make sure objects actually “live” in space and follow the rules of physics. It’s like the gap between doodling a car on a napkin and actually building a working Hot Wheels track. Getting from pretty pictures to living, breathing worlds is the core challenge for Generative AI for 3D Worlds right now.

Now that you understand the progression, let’s explore how this new technology actually works.

How Generative AI for 3D Worlds Actually Works

Alright, so, how does an AI just whip up a whole world outta thin air? It’s sorta wild, honestly. You’ve got a bunch of fancy tech all jamming together—the engine of Generative AI for 3D Worlds.

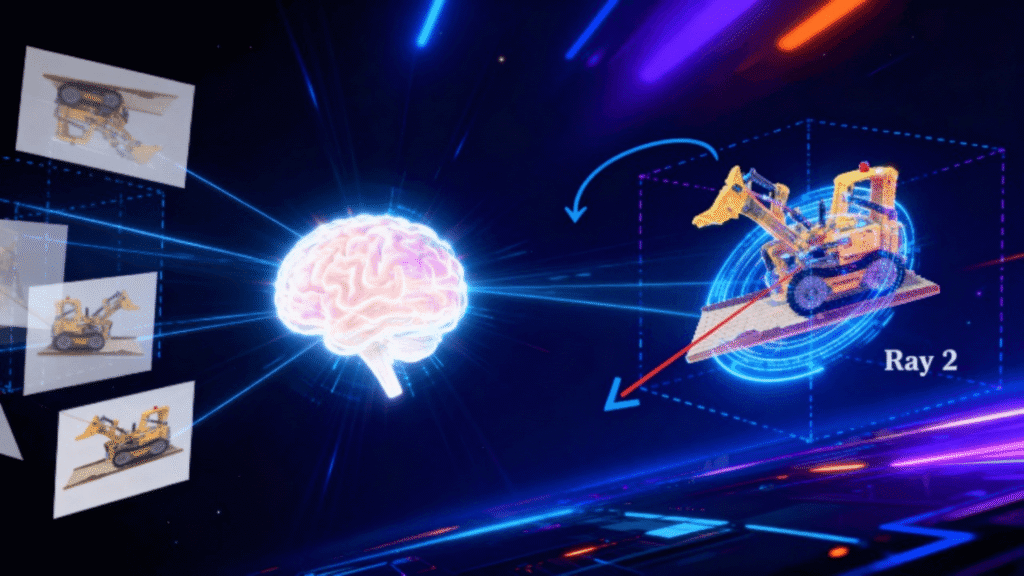

NeRFs (Neural Radiance Fields): The 3D Photographer

Let’s talk NeRFs. These things are kinda the MVPs here. Imagine you hand a NeRF a bunch of regular ol’ 2D snapshots of a scene, taken from different angles (with the camera position for each shot known). The NeRF’s like, “Cool, got it,” and learns, inside a neural network, a 3D version of what it saw: how solid the scene is at every point in space and what color each point looks from each direction. Once it’s trained, it can render a new, photorealistic shot from angles it never actually saw. It essentially mastered 3D photography without ever picking up a camera. Kinda freaky, right? (Source: UC Berkeley, “NeRF” Project Page)

💡 Authoritative Source: The Original NeRF Paper

The concept of NeRFs (Neural Radiance Fields), a core building block for many 3D world-generation approaches, was introduced in the 2020 paper “NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis” by researchers from UC Berkeley, Google Research, and UC San Diego. This paper is the foundation for much of the technology we see today.
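To make the “render a new shot from any angle” step described above a bit more concrete, here’s a minimal sketch of how a NeRF-style renderer turns samples along one camera ray into one pixel color (the volume-rendering sum from the NeRF paper). Big assumption: in a real NeRF, the densities and colors come from the trained neural network queried at each sample point; here they’re hard-coded toy values, so this only shows the compositing step, not the learning.

```python
import math

def render_ray(densities, colors, deltas):
    """Composite samples along one camera ray into a single pixel color.

    densities: sigma at each sample (how "solid" space is there)
    colors:    (r, g, b) emitted at each sample
    deltas:    distance between consecutive samples
    """
    transmittance = 1.0  # fraction of light that has made it this far unblocked
    pixel = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(densities, colors, deltas):
        # Chance the ray "hits" something inside this little segment.
        alpha = 1.0 - math.exp(-sigma * delta)
        weight = transmittance * alpha
        for k in range(3):
            pixel[k] += weight * color[k]
        # Whatever was hit here blocks that much light for the samples behind it.
        transmittance *= 1.0 - alpha
    return tuple(pixel)

# Two samples of empty space, then a solid red wall.
print(render_ray([0.0, 0.0, 50.0],
                 [(0.0, 0.0, 1.0), (0.0, 0.0, 1.0), (1.0, 0.0, 0.0)],
                 [0.1, 0.1, 0.1]))  # red channel ≈ 0.993, green and blue 0.0
```

Because each sample is weighted by how much light survived everything in front of it, a solid object up front automatically hides whatever sits behind it. That’s how depth and occlusion fall out of the math for free.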

Diffusion Models: The Creative Sculptor

Ok, here’s the magic behind most of those trippy AI images you’ve seen. Diffusion models begin with pure chaos: random noise (static-like pixels for an image, or a jumble of scattered dots for a 3D shape) that makes absolutely no sense. Then, nudged along by a text prompt, they strip that noise away step by step and mold it into something that actually does. You’re watching digital static congeal into a haunted castle or a neon jungle simply because you asked for it.
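Here’s a toy sketch of that “static congeals into a shape” loop, written in the style of DDPM (one common diffusion formulation) and using its usual linear noise schedule (1,000 steps, noise levels from 0.0001 to 0.02). Heavy assumptions: the “shape” is just four hypothetical 3D points, there’s no text prompt, and an `oracle_noise` function stands in for the trained neural network. That oracle cheats by already knowing the target, which only works because our entire “dataset” is one shape; a real model has to learn to predict the noise from huge amounts of training data.

```python
import math
import random

# Hypothetical target "shape": four corners of a tiny pyramid, as 3D points.
TARGET = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]

def linear_schedule(steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise amounts (betas) and their running products (alpha_bars)."""
    betas = [beta_start + (beta_end - beta_start) * t / (steps - 1) for t in range(steps)]
    alphas = [1.0 - b for b in betas]
    alpha_bars, running = [], 1.0
    for a in alphas:
        running *= a
        alpha_bars.append(running)
    return betas, alphas, alpha_bars

def oracle_noise(x_t, x_0, alpha_bar):
    """Stand-in for the trained network: exact noise, valid only for a single known shape."""
    return (x_t - math.sqrt(alpha_bar) * x_0) / math.sqrt(1.0 - alpha_bar)

def sample(target, steps=1000, seed=0):
    rng = random.Random(seed)
    betas, alphas, alpha_bars = linear_schedule(steps)
    # Start from pure chaos: every coordinate drawn from a standard normal.
    points = [[rng.gauss(0.0, 1.0) for _ in range(3)] for _ in target]
    for t in reversed(range(steps)):
        for point, goal in zip(points, target):
            for d in range(3):
                eps = oracle_noise(point[d], goal[d], alpha_bars[t])
                # DDPM mean update: remove this step's share of the predicted noise.
                mean = (point[d] - betas[t] / math.sqrt(1.0 - alpha_bars[t]) * eps) / math.sqrt(alphas[t])
                # Re-inject a little fresh noise on every step except the final one.
                noise = math.sqrt(betas[t]) * rng.gauss(0.0, 1.0) if t > 0 else 0.0
                point[d] = mean + noise
    return points

print(sample(TARGET))  # the random dots land back on the pyramid's corners
```

With a perfect oracle the last step lands exactly on the target; a learned network only gets approximately there, which is where all the real difficulty (and all the training compute) lives.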

World Models: The “Brain” of Physics

Now, if you want your 3D world to actually do stuff, a pretty picture won’t cut it. Enter the world model. Think of it as the AI’s cheat sheet for how things are supposed to work: given what’s happening now (and what you just did), it predicts what happens next. That’s what makes things slide, bounce, and break the way the laws of physics say they should. No world model? You’re left with a fancy diorama. With it? You have a living, breathing universe.
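Here’s one very stripped-down way to picture a world model: watch the “real world” for a while, learn a rule for “what happens next,” then use that learned rule to imagine the future without touching reality. Everything here is hypothetical: a cart’s velocity is the whole world, the made-up physics is “friction keeps 90% of your speed, each push adds 0.5,” and a two-number linear fit stands in for the giant neural networks real world models use.

```python
import random

def real_world_step(velocity, push, rng):
    """The hidden "real world" (made-up dynamics): friction keeps 90% of the
    velocity, each push adds 0.5, plus a little random jitter."""
    return 0.9 * velocity + 0.5 * push + rng.gauss(0.0, 0.01)

def collect_transitions(n, seed=0):
    """Watch the real world for n steps and record (velocity, push, next_velocity)."""
    rng = random.Random(seed)
    data, v = [], 0.0
    for _ in range(n):
        push = rng.choice([-1.0, 0.0, 1.0])
        v_next = real_world_step(v, push, rng)
        data.append((v, push, v_next))
        v = v_next
    return data

def fit_world_model(data):
    """Least-squares fit of next_velocity ≈ a * velocity + b * push.

    Solves the 2x2 normal equations directly with Cramer's rule."""
    svv = sum(v * v for v, _, _ in data)
    svp = sum(v * p for v, p, _ in data)
    spp = sum(p * p for _, p, _ in data)
    svy = sum(v * y for v, _, y in data)
    spy = sum(p * y for _, p, y in data)
    det = svv * spp - svp * svp
    a = (svy * spp - svp * spy) / det
    b = (svv * spy - svp * svy) / det
    return a, b

def imagine(model, v0, pushes):
    """Roll the learned model forward to predict future velocities,
    without taking a single step in the real world."""
    a, b = model
    trajectory = [v0]
    for push in pushes:
        trajectory.append(a * trajectory[-1] + b * push)
    return trajectory

model = fit_world_model(collect_transitions(500))
print(model)  # close to (0.9, 0.5): the model has "discovered" friction and push strength
print(imagine(model, 0.0, [1.0, 1.0, 0.0, 0.0]))  # predicted velocities, imagined, not measured
```

The `imagine` step is the whole point: once the rule is learned, the AI can “play out” what would happen before it ever acts, which is exactly what a robot or a game engine wants from a world model.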

So yeah, that’s the basic idea. Now, let’s get to the cool stuff.

Science Fiction No More: Mind-Blowing Demos of Generative AI for 3D Worlds

This isn’t some pipe dream, by the way. People are already cranking out insane demos that’ll melt your brain.

Google’s “Genie”: The 2D World Generator

Check this out: in early 2024, Google DeepMind dropped “Genie.” It can take a single image (like, literally one photo or even your scribbly doodle) and, boom, turn it into a playable 2D platform game that looks and feels like that image. Genie picked up the physics and controls just by binge-watching a massive pile of gameplay videos online, without anyone telling it which buttons were being pressed. It’s inventing whole game worlds and the rules that make them tick. That’s some next-level stuff. (Source: Google DeepMind, “Genie”)

NVIDIA’s Research and the Metaverse Connection

NVIDIA’s all in on this stuff. Their team keeps showing off jaw-dropping demos where AI spits out massive, insanely realistic 3D cities like it’s nothing. They use virtual worlds like these to train and test self-driving cars, basically turning them into digital playgrounds for robots. They’ve slapped the label “Industrial Metaverse” on it. This is the poster child for how Generative AI for 3D Worlds is shaking up big business.


Why This Changes Everything: How Generative AI for 3D Worlds Will Be Used

| Area | Transformation | Example |
|---|---|---|
| Gaming | Procedural infinite worlds | Personalized game maps |
| Industry | Digital twins for factories | NVIDIA Omniverse |
| AI Training | Synthetic simulations | Self-driving car datasets |

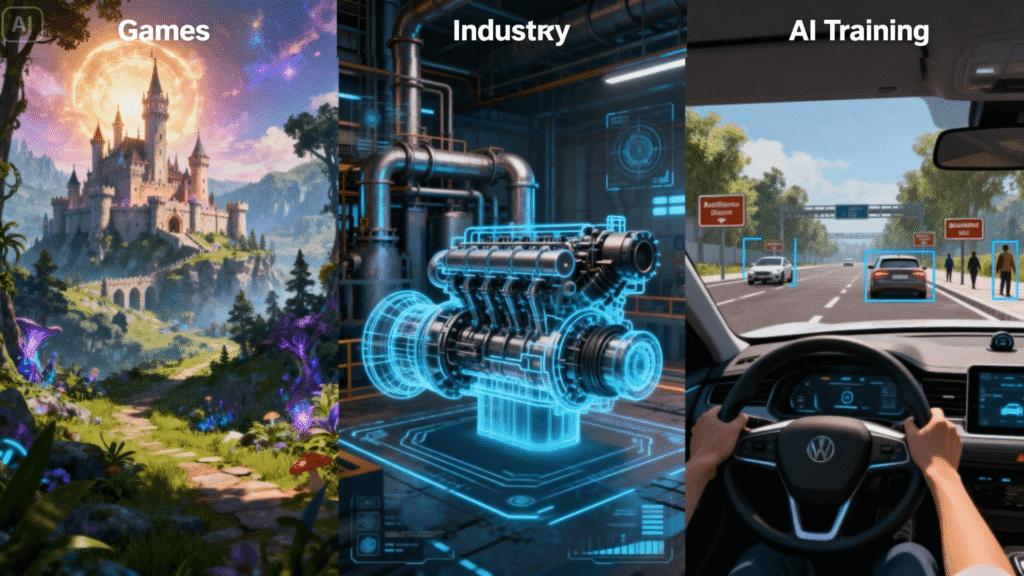

Whoa, buckle up: gaming’s about to get wild. Forget the days when devs spent ages painstakingly crafting one lonely map; all that’s toast. Soon, AI could be churning out endless worlds on the fly, so every player basically gets their own universe.
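The “every player gets their own endless universe” idea has a classic, pre-AI ancestor that’s worth seeing: seeded procedural generation, where each chunk of the map is built on demand from the player’s seed plus the chunk’s coordinates. To be clear, this sketch is plain procedural randomness, not a generative AI model; the seed format, the 4x4 chunk size, and the 0 to 9 height range are all made up for illustration. A generative-AI version could swap the random-number step for a trained model while keeping the same “generate only what the player can see, and make it reproducible” pattern.

```python
import hashlib
import random

def chunk_heights(player_seed: str, cx: int, cy: int, size: int = 4) -> list[list[int]]:
    """Generate a size x size grid of terrain heights (0-9) for chunk (cx, cy).

    The same player seed and coordinates always give the same chunk, so the world
    never has to be stored; it is simply regenerated when the player walks back.
    """
    key = f"{player_seed}:{cx}:{cy}".encode("utf-8")
    # Hash the key so nearby chunks get unrelated random streams.
    chunk_seed = int.from_bytes(hashlib.sha256(key).digest()[:8], "big")
    rng = random.Random(chunk_seed)
    return [[rng.randint(0, 9) for _ in range(size)] for _ in range(size)]

print(chunk_heights("alice", 0, 0))               # Alice's spawn area
print(chunk_heights("bob", 0, 0))                 # same spot, different universe
print(chunk_heights("alice", 10**9, -10**9))      # "infinite": any coordinates work
```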

And it’s not just gamers cashing in. Businesses are about to get their own digital playgrounds, too. Picture a company doing a dry run of its factory as a virtual replica (a “digital twin”) before coughing up real cash for the physical one. That’s the heart of this “industrial metaverse” talk. Stuff like NVIDIA’s Omniverse isn’t just hype: it’s laying down the first blocks, built on open standards like OpenUSD so everyone’s tools can plug in. (Source: NVIDIA Omniverse)
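To make “everyone can plug in” a little more concrete, here’s a hand-rolled sketch that writes a minimal OpenUSD text file (`.usda`) for a hypothetical factory floor, with each machine stood in for by a plain cube. It builds the text directly so it needs no extra packages; in a real pipeline you’d use the official USD Python bindings (the `pxr` module) instead, and the machine names and positions here are invented.

```python
def factory_to_usda(machines):
    """Build a minimal OpenUSD text layer (.usda) for a hypothetical factory floor.

    machines: list of (name, (x, y, z)) tuples; names must be valid USD identifiers
    (letters, digits, underscores, not starting with a digit).
    """
    lines = ["#usda 1.0", "(", '    defaultPrim = "Factory"', ")", "", 'def Xform "Factory"', "{"]
    for name, (x, y, z) in machines:
        lines += [
            f'    def Cube "{name}"',
            "    {",
            "        double size = 1",
            f"        double3 xformOp:translate = ({x}, {y}, {z})",
            '        uniform token[] xformOpOrder = ["xformOp:translate"]',
            "    }",
        ]
    lines.append("}")
    return "\n".join(lines) + "\n"

layout = [("Press_01", (0.0, 0.0, 0.0)), ("Robot_Arm_02", (2.0, 0.0, 1.5))]
print(factory_to_usda(layout))
```

Because the scene is described in a shared, plain format rather than one vendor’s private file type, any USD-aware tool should be able to open the same digital twin, which is the whole appeal of building on an open standard.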

Next-Level Simulators for AI Training: This is arguably the most important application. Being able to quickly generate millions of realistic simulation environments is key to safely training the next generation of AI. This is how we could train self-driving cars on an endless supply of “edge cases” (rare, risky situations like a child chasing a ball into the street), teach humanoid robots to navigate complex spaces, and let autonomous AI agents practice their tasks. The future of training is powered by Generative AI for 3D Worlds.
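A common trick behind “millions of environments” is domain randomization: randomly vary the settings of a simulated scene so the AI sees far more variety than any real test drive could give it, and deliberately oversample the rare, dangerous cases. The sketch below only generates scenario descriptions; the field names, value lists, and the 30% edge-case rate are all hypothetical, and a real pipeline would hand each description to a simulator or 3D world generator to actually build the scene.

```python
import random
from dataclasses import dataclass

@dataclass
class Scenario:
    weather: str
    time_of_day: str
    pedestrians: int
    jaywalker: bool  # the rare, risky "edge case" we deliberately oversample

def generate_scenarios(n, edge_case_rate=0.3, seed=0):
    """Domain randomization: sample n random scene descriptions for a driving simulator."""
    rng = random.Random(seed)
    scenarios = []
    for _ in range(n):
        scenarios.append(
            Scenario(
                weather=rng.choice(["clear", "rain", "fog", "snow"]),
                time_of_day=rng.choice(["day", "dusk", "night"]),
                pedestrians=rng.randint(0, 20),
                # Rare on real roads; in simulation we can make it common on purpose.
                jaywalker=rng.random() < edge_case_rate,
            )
        )
    return scenarios

batch = generate_scenarios(2000)
print(batch[0])
print(sum(s.jaywalker for s in batch), "of", len(batch), "scenes include a jaywalker")
```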

Now that we’ve seen the potential, let’s look at the challenges.

The Hurdles Ahead: Challenges for Generative AI for 3D Worlds

Computational Cost: Generating these complex 3D worlds takes an immense amount of computing power. That’s one reason the race to build the next generation of AI chips matters so much.
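A bit of back-of-the-envelope arithmetic shows why. Assuming plain NeRF-style rendering with roughly the sampling setup reported in the original NeRF paper (64 “coarse” samples per ray, then a “fine” pass evaluated at those 64 plus 128 extra samples) and one ray per pixel, just drawing a single 1080p frame takes hundreds of millions of neural-network queries, before you even count training or generating the world in the first place.

```python
def nerf_queries_per_frame(width=1920, height=1080, coarse=64, fine=128):
    """Neural-network evaluations needed to render one frame, one ray per pixel."""
    rays = width * height
    # Coarse pass at `coarse` points, then a fine pass over the coarse + fine points.
    per_ray = coarse + (coarse + fine)
    return rays * per_ray

per_frame = nerf_queries_per_frame()
print(f"{per_frame:,} queries per frame")                  # 530,841,600 queries per frame
print(f"{per_frame * 60:,} queries per second at 60 fps")  # 31,850,496,000 queries per second at 60 fps
```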

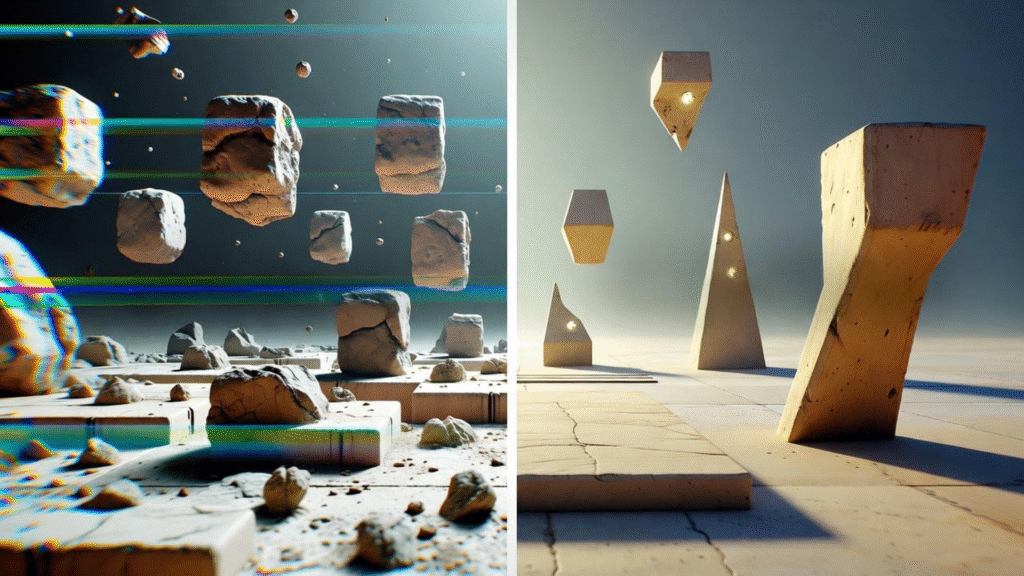

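Consistency and Control: A major challenge is keeping a generated world’s “logic” and “physics” consistent, so the building you walked past is still there when you turn around and objects keep obeying the same rules. Another hurdle is giving human creators fine-grained control over the AI’s output.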

The Risk of “3D Slop”: Just as we’ve seen an explosion of low-quality AI-generated images (“AI slop”), there’s a risk of the internet being flooded with buggy, low-quality 3D worlds. That makes quality control one of the big challenges for Generative AI for 3D Worlds.

Conclusion: We’re Not Just Generating Content, We’re Generating Realities

The journey of Generative AI for 3D Worlds is the culmination of everything that has come before. It merges the creative power of diffusion models with the spatial understanding of NeRFs and the physical reasoning of world models. This isn’t just another incremental upgrade; this is AI leveling up from narrator to straight-up world-builder. Kinda wild, right?

We are, in essence, inching closer to a future where you can no longer tell the difference between what is digital and what is “real.” This is the stuff that’s going to power tomorrow’s games and those trippy metaverses. Getting your head around Generative AI for 3D Worlds is like getting a teaser for the next season of the AI saga. And hey, synthetic data isn’t here to erase reality; it’s just making the playground a whole lot bigger.

🧠 Ready to Build Your Own AI-Generated World?

Experiment with real tools like Skybox AI or NVIDIA Omniverse and start creating your own 3D environments.

Written by Gisely Noronha, technology writer focused on Artificial Intelligence and data ethics.

Sources: Google DeepMind, UC Berkeley, NVIDIA, MIT CSAIL.

So how is this different from what Meta (Facebook) was trying to do?

Meta’s original metaverse vision was all about social VR: hanging out with other people in virtual spaces. The current wave of Generative AI for 3D Worlds is more about generating realistic, useful environments for industry (“digital twins”) and next-generation gaming.

Is this tech gonna make 3D artists extinct?

Nope, not buying it. It’s more of a remix than a funeral, you know? Sure, some of the boring grunt work gets handed off to the software, but the real magic? That’s still on the artist. You’re still in the driver’s seat, picking the colors, setting the vibe, making sure it doesn’t end up looking like a robot’s fever dream. Artists aren’t getting replaced, they’re just getting some killer new toys.

And about those generated worlds, man, they’re getting wild. The progress is nuts. Some of the latest NVIDIA demos? Blink twice and you’ll swear you’re looking at actual footage. It’s not perfect everywhere (yet), but wow, it’s getting harder and harder to tell what’s real and what’s computer magic.

So, what’s the deal with a world model?

Basically, it’s the AI’s personal headcanon for how reality ticks. Imagine a squished, CliffsNotes version of the universe living inside a neural network. It takes all that “if this happens, then that happens” stuff and crams it into something the AI can use to predict what’s coming next. Spooky, right?

Now, does this mean you need NASA’s computer room just to run it?

Not exactly. Sure, getting these things up and running (training the model and teaching it the ropes) eats up a ton of computer juice. We’re talking server farms, not your average laptop. But once the heavy lifting’s done and the 3D world’s loaded up, you can often run it on a beefy gaming PC or even a solid VR headset, depending on the model and how detailed the world is. So, nope, you don’t need to rob a data center. Just don’t try it on your grandma’s old Dell.