The Future of AI Chips: Your Ultimate Guide to the Post-Silicon Era

Alright, let’s get real for a second: today’s AI is basically a Formula 1 engine revving at max, and we’ve shoved it inside your mom’s minivan. That “minivan” is the classic computer chip. It does the job, but it’s like running a marathon in flip-flops: hot, clunky, and you’re definitely not breaking any speed records. AI needs a whole new kind of chip.

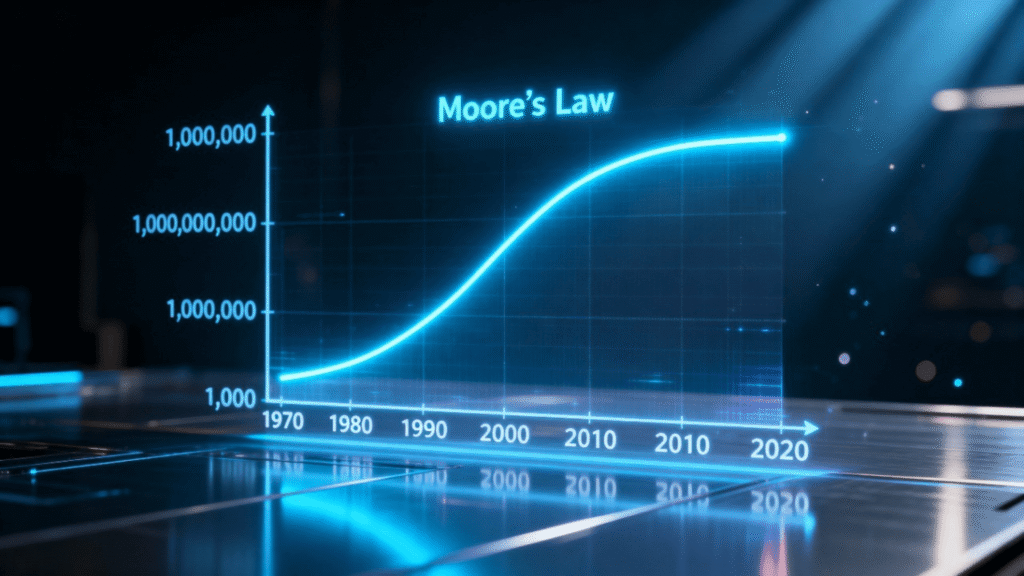

For fifty years, the tech world’s been bowing down to Moore’s Law, the observation that the number of transistors on a chip doubles roughly every two years. That’s how we got from Tamagotchis to PlayStations. But here’s the kicker: physics doesn’t give a damn about our deadlines. We’ve hit walls. Data centers are guzzling electricity, and every new shrink is slower, harder, and pricier than the last.

So, what’s the play now? If we want AI to stop acting like a clumsy toddler, we need hardware that can actually learn. That’s why everyone’s freaking out and throwing money at the next big breakthrough. Neuromorphic computing? Chiplets? Yep, every insane idea you’ve heard is on the table. This guide will break down why your laptop’s processor is a potato for AI, how brain-inspired chips are changing the game, and who’s winning this all-out chip war.

The End of an Era: Why Traditional Chips Are Hitting a Wall

Alright, listen up: everything you obsess over—your phone, your laptop, that pretentious “smart” fridge—runs on sand. No, really. Silicon. Those tiny transistors? Picture an army of microscopic goblins, flipping switches like they’re in a rave. “1” is on, “0” is off. For fifty years, Moore’s Law was everybody’s comfort blanket, but lately, it’s sputtering out.

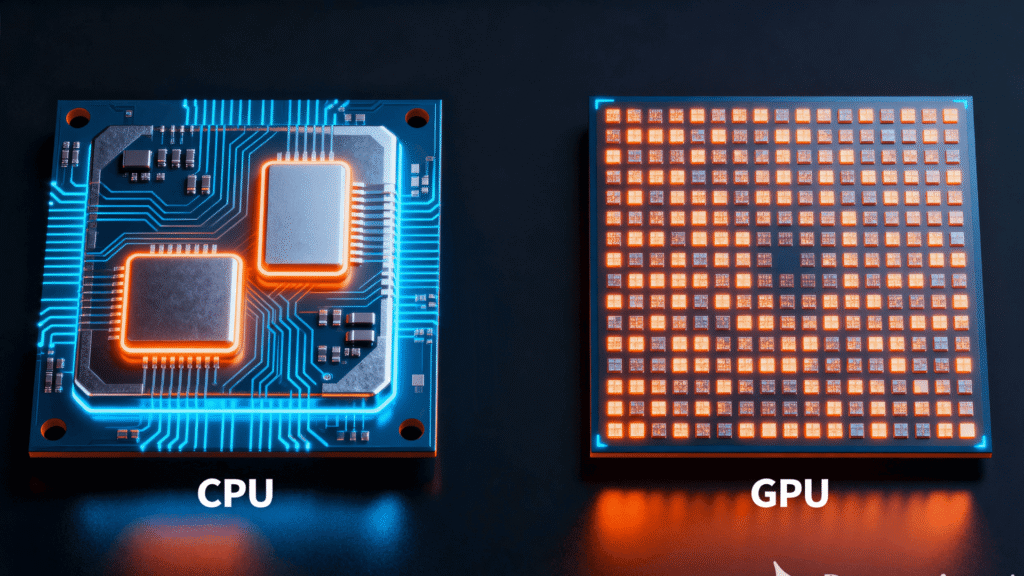

Physics isn’t just slowing us down; it’s flipping the table. Transistors have shrunk so much they’re playing hide-and-seek with atoms. Get that small, and quantum weirdness takes over. Electrons just Houdini straight through barriers, a trick physicists call quantum tunneling, so switches leak even when they’re “off.” Then there’s the ancient architecture problem, aka the Von Neumann bottleneck. Your CPU (the brainy bit) and your memory (where data chills) are separate, and every number has to travel between them. It’s like sending a chef sprinting to a pantry down the hall for every single spice. For AI, which shuffles enormous piles of numbers for every answer, it’s a logistical nightmare. (Source: IBM Research)
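Want rough numbers on that pantry sprint? Here’s a back-of-the-envelope sketch in Python. It assumes a single neural-network layer that multiplies an n-by-n weight matrix by a vector, stores every value as a 4-byte float, and fetches each weight from memory exactly once with no caching. The function name `arithmetic_intensity` and those sizes are made up for illustration, not taken from any real chip.

```python
def arithmetic_intensity(n: int, bytes_per_value: int = 4) -> float:
    """Math operations per byte moved for y = W @ x, with W of shape (n, n).

    Assumptions (illustrative only): every weight is read from memory
    exactly once, the input and output vectors are each moved once,
    and no caching is modeled.
    """
    flops = 2 * n * n                     # one multiply + one add per weight
    values_moved = n * n + n + n          # W, plus x going in and y coming out
    bytes_moved = values_moved * bytes_per_value
    return flops / bytes_moved


if __name__ == "__main__":
    for n in (1_000, 10_000):
        print(n, arithmetic_intensity(n))
```

Under those assumptions the answer stays just under half a math operation per byte fetched, no matter how big `n` gets. That’s the chef spending more time running than cooking.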

The GPU Revolution: How NVIDIA Cornered the AI Market

So, here’s where things get spicy. The first real breakthrough didn’t come from some wild new tech. Nope—it was about reusing something we already had: the humble GPU (Graphics Processing Unit).

If you need an analogy, CPUs are like master chefs: a few smart cores doing complex tasks one by one. A GPU? Picture five thousand short-order cooks all slapping together a grilled cheese at the exact same time. That’s parallel processing. Turns out, training neural networks is basically multiplying gigantic grids of numbers (matrices) together, and every cell of the answer can be worked out independently, which makes it a perfectly parallel task. In the early 2010s, AI nerds figured out that gaming GPUs could demolish these math chores.
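Here’s a minimal sketch of why that math splits up so nicely, written in plain Python. The helper names `output_cell` and `matmul_parallel` are invented for this example. The threads are only there to show that each cell is its own independent job; Python threads won’t actually make this faster, and a real GPU does the same thing in hardware with thousands of cores.

```python
from concurrent.futures import ThreadPoolExecutor


def output_cell(a, b, row, col):
    """One 'grilled cheese': a single cell of the matrix product a @ b."""
    return sum(a[row][k] * b[k][col] for k in range(len(b)))


def matmul_parallel(a, b, workers=4):
    """Multiply two matrices (lists of lists), one task per output cell.

    No cell depends on any other cell, so the tasks can run in any order.
    """
    rows, cols = len(a), len(b[0])
    cells = [(r, c) for r in range(rows) for c in range(cols)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in the same order as `cells`.
        flat = list(pool.map(lambda rc: output_cell(a, b, *rc), cells))
    # Reassemble the flat list of results into rows.
    return [flat[r * cols:(r + 1) * cols] for r in range(rows)]


if __name__ == "__main__":
    a = [[1, 2], [3, 4]]
    b = [[5, 6], [7, 8]]
    print(matmul_parallel(a, b))  # [[19, 22], [43, 50]]
```

Four output cells, four cooks, zero waiting on each other. Scale that up to matrices with millions of cells and you can see why a chip with thousands of simple cores wins.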

NVIDIA was ready for this. Back in 2006 it had introduced CUDA, a programming platform that lets developers run general-purpose code on its GPUs, and for AI devs it was basically a cheat code. Suddenly, their GPUs weren’t just for playing Fortnite; they became the heartbeat of the AI revolution, turning the company into tech-world royalty. (Source: NVIDIA)

Beyond GPUs: The Rise of Specialized AI Accelerators

While GPUs are great, they’re still an adaptation. The next frontier is hardware designed for one purpose: running AI. Google, for example, cooked up its own chip: the Tensor Processing Unit (TPU). It’s an ASIC (application-specific integrated circuit), a chip built to do one job stupidly fast. Most of the general-purpose extras? Gone. The TPU is a lean, mean math machine for neural networks, built to beat general-purpose GPUs on speed and efficiency for the workloads it targets.

Chiplets: The “Lego” Revolution in Chip Design

Chiplets are the new hotness in chip design. Picture this: silicon Lego for nerds. Instead of one monster chip running the show, you get a bunch of mini-chips: one for graphics, one for general-purpose brains, maybe a tiny AI gremlin. You snap ’em together in one package however you want and boom, you’ve built your own Franken-chip. It’s cheaper, because small dies are easier to manufacture without defects, and it’s more flexible. Everyone from AMD to Intel is jumping on this train.
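To see where the “cheaper” part can come from, here’s a rough sketch in Python using a simple Poisson yield model, where the chance that a die has zero defects falls off exponentially with its area. Everything numeric here is an assumption for illustration: the 8 cm² of total silicon, the 0.1 defects per cm², and the function names are all hypothetical, and packaging costs are ignored entirely.

```python
import math


def poisson_yield(area_cm2: float, defects_per_cm2: float) -> float:
    """Fraction of dies with zero defects under a simple Poisson model."""
    return math.exp(-area_cm2 * defects_per_cm2)


def silicon_per_good_product(total_area_cm2: float, pieces: int,
                             defects_per_cm2: float) -> float:
    """Average wafer area (cm^2) spent per working product.

    The product is split into `pieces` equal dies. A bad die is thrown
    away on its own instead of taking the whole product down with it.
    Packaging and interconnect costs are ignored (assumption).
    """
    piece_area = total_area_cm2 / pieces
    good_fraction = poisson_yield(piece_area, defects_per_cm2)
    return pieces * piece_area / good_fraction


if __name__ == "__main__":
    # Hypothetical numbers: 8 cm^2 of logic, 0.1 defects per cm^2.
    monolithic = silicon_per_good_product(8.0, pieces=1, defects_per_cm2=0.1)
    chiplets = silicon_per_good_product(8.0, pieces=4, defects_per_cm2=0.1)
    print(f"one big die:   {monolithic:.1f} cm^2 per good product")
    print(f"four chiplets: {chiplets:.1f} cm^2 per good product")
```

With these made-up inputs, the four-chiplet version burns through noticeably less silicon per working product, because one speck of dust kills a small die instead of the whole monster chip. Real numbers depend on the fab, the process, and what the packaging costs.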

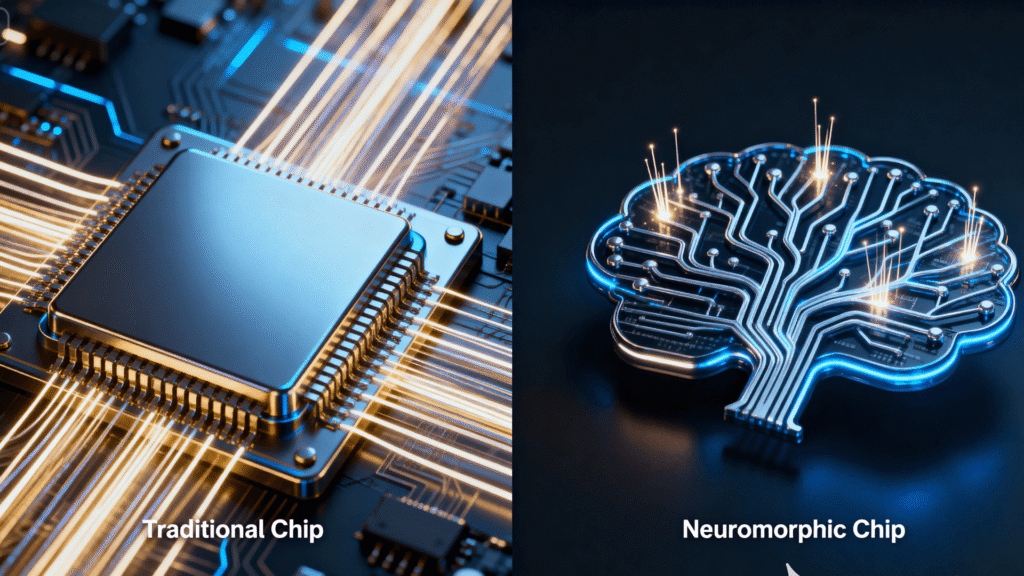

Neuromorphic Computing: Building a Chip That Thinks Like a Brain

This is the most radical leap. While GPUs and TPUs get better at the math, neuromorphic computing aims to replicate the brain’s architecture. These chips ditch the old rules. The hardware has fake “neurons” and “synapses” doing both the thinking and remembering in one spot. No more data traffic jams. Way lower power bills, way less waiting around.

These chips use “spiking neural networks.” The neurons are basically sleeping on the job until something interesting pops up, then they fire a quick zap. It’s like that one friend who only speaks up when there’s something real to say. According to Intel, its Loihi 2 research chip has pulled off some next-level AI magic on certain tasks using up to a thousand times less juice than a regular processor. The big brains at Intel and IBM are still in their science fair phase, but the stuff they’ve shown off is nuts. (Source: Intel Labs)
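If you want to see the “sleep until something interesting happens” idea in code, here’s a toy leaky integrate-and-fire neuron in Python, one of the simplest textbook models of a spiking neuron. It’s a sketch, not how Loihi or any real chip is programmed; the function name `simulate_lif` and the threshold and leak values are assumptions picked for illustration.

```python
def simulate_lif(inputs, threshold=1.0, leak=0.9):
    """Simulate one leaky integrate-and-fire neuron over discrete time steps.

    inputs:    incoming signal at each step
    threshold: potential at which the neuron fires (illustrative value)
    leak:      fraction of potential kept from one step to the next
    Returns a list of 0/1 spikes, one per step.
    """
    potential = 0.0
    spikes = []
    for current in inputs:
        potential = potential * leak + current  # leak a little, then add input
        if potential >= threshold:
            spikes.append(1)                    # fire a quick zap...
            potential = 0.0                     # ...and reset
        else:
            spikes.append(0)                    # stay quiet
    return spikes


if __name__ == "__main__":
    print(simulate_lif([0.0, 0.0, 0.0]))       # silence in, silence out
    print(simulate_lif([0.5, 0.6, 0.0, 1.2]))  # fires on steps 2 and 4
```

No input means no spikes, and no spikes means nothing downstream has to do any work. That’s the basic reason spiking hardware can sip power instead of guzzling it.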

Source: WebsEdgeScience

The Post-Silicon Era: A Glimpse into the Future of AI Chips

So, yeah, silicon’s still king for now, but there’s a whole crew of researchers itching to ditch it. Enter optical computing (photonics), using pure light instead of electrons. Imagine your laptop never getting hot—yeah, I want that future, too. And then there’s the ultimate form of brain-inspired computing: Biological Computing. As we explored in our guide to Organoid Intelligence, this field abandons silicon entirely in favor of “wetware.”

Conclusion: A New Golden Age for Computer Architecture

The slowdown of Moore’s Law isn’t the end; it’s the start of a new golden age of creativity. We are moving from the era of the general-purpose CPU to an era of specialized computing. The battle for the future of AI chips will be won on the factory floor of semiconductor fabs and in the research labs designing the fundamental hardware that makes intelligence possible. These chips are the foundation on which any future Artificial General Intelligence would have to be built.

What’s up with “7nm” or “3nm” chips?

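Right, so “nm” stands for nanometer, which is a billionth of a meter. The number used to track the size of the tiniest features on a chip, and a smaller number meant more transistors, more speed, and less power draw. These days “7nm” and “3nm” are mostly marketing labels for a manufacturing generation rather than a literal measurement, and since we’re running up against the laws of physics, each step down buys less than it used to.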

Will neuromorphic chips replace CPUs and GPUs?

Honestly? Not a chance. It’s like asking if a chef’s knife is gonna replace a blender. Future computers are shaping up to be a tag team: CPUs for everyday stuff, GPUs for heavy-duty math, and neuromorphic chips for lightning-fast, super-efficient AI tasks.

Why is there a “chip war” between the U.S. and China?

Because chips are the new oil. Try running a phone, a missile, or even a fridge without them—good luck. The U.S. and its allies are the heavyweights in making the most advanced chips, and China wants in. Whoever controls the chips, controls the future.

What is an ASIC?

ASICs (application-specific integrated circuits) are the anti-jack-of-all-trades. They’re built for one thing, and they crush it. Google’s TPU is an ASIC for AI. They’re stupid fast at what they’re made for, but have very little flexibility for anything else.

How do chiplets actually connect to each other?

Think tiny Lego blocks for computers. Instead of carving everything into one giant die, engineers place a bunch of smaller “chiplets” super close together inside a single package, often on a slice of silicon called an interposer that’s packed with microscopic wiring. That way, they can chat with each other at ridiculous speeds, almost like they’re one giant chip.
