Neural Networks Explained: The Ultimate Beginner’s Guide to How AI Thinks

How do you spot a cat? Come on, it’s not like your brain pulls out a clipboard and starts ticking boxes. You just see it and bam, you’re like, “Yep, that’s a cat.” That’s your brain doing its weird, magical pattern-spotting thing. Now imagine: what if we could build a computer with that kind of superpower? That’s the big, mind-blowing idea behind Artificial Neural Networks, or ANNs if you wanna sound cool at parties. This guide is your entry point into how AI “thinks.”

These things are basically the backbone—the real engine—behind all the flashy AI you keep bumping into. Your phone’s face unlock? ANN. Netflix knowing your weird taste in documentaries? ANN again. Everyone’s obsessed with dropping “neural network” in tech chatter these days, like it’s some kind of digital voodoo. For many, “neural network” might as well mean “the computer did some wizardry, don’t ask questions.”

This guide is here to demystify it all. No heavy jargon, no soul-crushing mathematics. We will break down what a neural network is, how its basic components work, and, most importantly, how it actually “learns.” By the end, you’ll understand why many people consider it one of the most important breakthroughs in the history of Artificial Intelligence.

The Blueprint: How the Brain Inspired the First Neural Networks

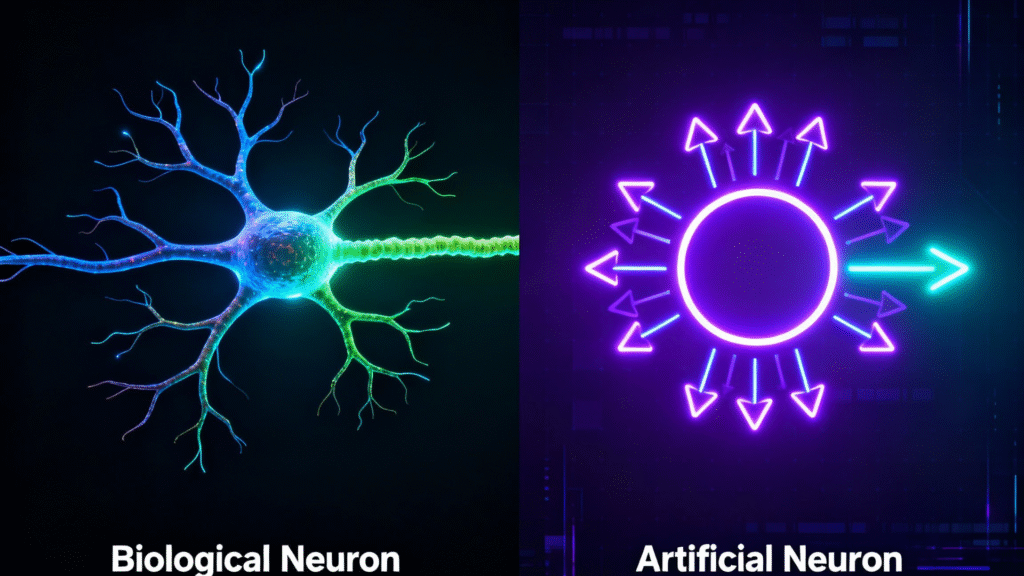

Alright, let’s get real for a sec. The whole idea for neural networks basically rips off our own noggin—specifically, the humble neuron. The brain’s packing, like, 86 billion of these little cells. A neuron’s like a tiny switchboard: it sucks up signals, mixes ‘em all together, and if those signals are spicy enough—boom, it fires off its own signal down the line.

Here’s a fun way to picture it: think of a room full of people gossiping. Every person’s a neuron. They’re soaking up rumors (inputs), deciding how juicy each tidbit is (the “weight”), and if enough drama piles up, they blurt it out to their own crew (output). The real “aha!” moment for AI nerds? We just had to mimic this threshold-based yes/no thing. Enter the “perceptron,” the OG artificial neuron, cooked up by Frank Rosenblatt back in the late ’50s. It multiplies each input by its weight, adds everything up, and outputs a 1 if the total clears a threshold and a 0 if it doesn’t. That’s it. Super basic. And somehow, that’s the seed that grew into the whole AI jungle. (Source: Cornell Aeronautical Laboratory)
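If you like seeing things in code, here’s a minimal sketch of that gossip-room neuron in Python. The function name, the rumor values, the weights, and the threshold are all made up for illustration. This is not Rosenblatt’s original setup, just the threshold idea in its simplest form.

```python
def perceptron(inputs, weights, threshold):
    """Return 1 (fire) if the weighted sum of inputs reaches the threshold, else 0."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


# Three rumors arriving at once: 1 means "heard it", 0 means "didn't".
rumors = [1, 0, 1]
# How juicy this neuron finds each source (hypothetical values).
juiciness = [0.6, 0.9, 0.3]

# Weighted sum is 0.6 + 0.3, which clears the 0.8 threshold, so the neuron fires.
fires = perceptron(rumors, juiciness, threshold=0.8)
print("fires" if fires else "stays quiet")
```

Drop the first rumor (change `rumors` to `[0, 0, 1]`) and the total falls to 0.3, so the neuron keeps its mouth shut.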

The Building Blocks of a Neural Network: Neurons, Layers, and Weights

Fast-forward to today and a neural network is like a souped-up Rube Goldberg machine made of perceptrons. If you peel back the jargon, it’s basically a bunch of these fake neurons stacked into layers, a kind of brainy assembly line.

The Layers: Where the Magic Actually Happens

First up, the Input Layer. Picture this as the network’s front door. It just sits there, waiting for you to throw in your data, like the pixels of a cat photo. The Hidden Layers are like the factory workers behind the scenes. The more hidden layers you have, the “deeper” your network gets (that’s where “deep learning” comes from). The first layer might spot edges, the next spots whiskers, and so on. Finally, the Output Layer is where the network spits out its final answer: “cat” or “not cat.”
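To make the assembly line concrete, here’s a rough sketch of data flowing from an input layer, through one hidden layer, to an output layer. Everything in it is an assumption for illustration: the four “pixel” values, the hand-picked weights and biases (explained in the Weights & Biases section), and the sigmoid squashing function. A real image network would have far more neurons and would learn its numbers instead of having them typed in.

```python
import math


def sigmoid(z):
    """Squash any number into the range 0..1."""
    return 1.0 / (1.0 + math.exp(-z))


def layer(inputs, weights, biases):
    """One layer: every neuron sums all inputs times its weights, adds its bias, then squashes."""
    return [
        sigmoid(sum(x * w for x, w in zip(inputs, neuron_weights)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


# Input layer: four made-up pixel brightness values between 0 and 1.
pixels = [0.0, 0.5, 0.9, 0.2]

# Hidden layer: 3 neurons, each with one weight per pixel (arbitrary numbers).
hidden_weights = [
    [0.2, -0.4, 0.7, 0.1],
    [-0.5, 0.3, 0.8, -0.2],
    [0.6, 0.1, -0.3, 0.4],
]
hidden_biases = [0.0, 0.1, -0.1]

# Output layer: 1 neuron with one weight per hidden neuron.
output_weights = [[1.2, -0.7, 0.5]]
output_biases = [0.05]

hidden = layer(pixels, hidden_weights, hidden_biases)
cat_score = layer(hidden, output_weights, output_biases)[0]
print(f"cat score: {cat_score:.2f}")
```

Going “deeper” just means calling `layer` more times, feeding each layer’s output into the next one.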

Weights & Biases: The Dials and Levers

Now, this is where stuff gets spicy. Every single connection between these neurons has a **weight**. Think of it as the volume knob for that signal. Training a network is just fiddling with those knobs until the music sounds right. Then there’s **bias**, which is basically a nudge for each neuron, helping it decide if it should even bother firing up. All these neurons, glued together by their weights and biases, end up as this wild, adaptable system that somehow learns.
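Here’s a tiny sketch of the knob and the nudge in action, using a single neuron. The `neuron` function and the specific numbers are hypothetical, and the sigmoid squashing step is one common choice assumed here, not something this guide prescribes.

```python
import math


def neuron(signal, weight, bias):
    """One artificial neuron: scale the signal, add the nudge, squash to 0..1."""
    z = signal * weight + bias
    return 1.0 / (1.0 + math.exp(-z))  # sigmoid squashing function


signal = 1.0  # the same incoming signal every time

# Turn the volume knob (weight) while leaving the bias at zero.
for weight in (-2.0, 0.0, 2.0):
    print(f"weight={weight:+.1f}, bias=+0.0 -> output={neuron(signal, weight, 0.0):.2f}")

# Keep the knob still and change the nudge (bias) instead.
for bias in (-2.0, 0.0, 2.0):
    print(f"weight=+1.0, bias={bias:+.1f} -> output={neuron(signal, 1.0, bias):.2f}")
```

Same signal going in, very different output coming out. Cranking the weight or the bias up pushes the output toward 1, and cranking either down pushes it toward 0.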

How Do Neural Networks Learn? (The “Learning” Bit)

Honestly, an untrained neural network is about as useful as a goldfish doing calculus. Training is where it gets its act together. The whole thing is a constant loop of “Guess. Check. Panic. Fix.” Picture this: you’re picking up darts for the first time.

- The Wild Guess (Feedforward): You grab a dart and just chuck it. That’s “feedforward.” The network gets a cat photo and guesses, “Eh, 20% chance that blob is a cat?”

- Measuring the Miss (Loss Function): You check how far off the bullseye you were. That’s your “error.” The network uses a “loss function” to spit out a number that basically screams, “Here’s how bad you messed up.”

- Fixing Your Aim (Backpropagation): Here’s where things get juicy. You think back on your throw and adjust your grip. That’s “backpropagation.” The network traces the error backward through its layers to work out how much each weight contributed to the miss, then nudges every weight a little in the direction that shrinks the error.

Source: @dansmarttutorials

Repeat that cycle a million times, and suddenly, it’s nailing the bullseye every time, finding cats in your grandma’s vacation pics without breaking a sweat. Kinda nuts, honestly. (Source: Stanford University CS231n Course Notes)
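The guess, check, fix loop above can be sketched with a single neuron in plain Python. Fair warning about the assumptions: the toy data (two yes/no clues per animal), the squared-error loss, the learning rate, and the 5,000 rounds are all made up for illustration. Real networks run the same loop across many layers and far more data.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Made-up toy data: [has_whiskers, says_meow] -> 1.0 if it's a cat, else 0.0
examples = [
    ([1.0, 1.0], 1.0),
    ([1.0, 0.0], 0.0),
    ([0.0, 1.0], 0.0),
    ([0.0, 0.0], 0.0),
]

weights = [0.0, 0.0]  # the knobs, starting out untrained
bias = 0.0
learning_rate = 1.0   # how big each nudge is (arbitrary choice)
loss_history = []

for epoch in range(5000):
    total_loss = 0.0
    for inputs, target in examples:
        # 1. The wild guess (feedforward)
        z = sum(x * w for x, w in zip(inputs, weights)) + bias
        guess = sigmoid(z)

        # 2. Measuring the miss (loss function: squared error)
        total_loss += (guess - target) ** 2

        # 3. Fixing your aim (backpropagation via the chain rule):
        #    d(loss)/dz = d(loss)/d(guess) * d(guess)/dz
        dloss_dz = 2 * (guess - target) * guess * (1 - guess)
        weights = [w - learning_rate * dloss_dz * x for w, x in zip(weights, inputs)]
        bias -= learning_rate * dloss_dz
    loss_history.append(total_loss)


def predict(inputs):
    return sigmoid(sum(x * w for x, w in zip(inputs, weights)) + bias)


print(f"loss: first round {loss_history[0]:.3f}, last round {loss_history[-1]:.3f}")
for inputs, target in examples:
    print(inputs, "-> guess", round(predict(inputs), 2), "| target", target)
```

The thing to watch is the loss shrinking between the first and last round. That shrinking number is the “learning.”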

A Quick Tour of the Neural Network Zoo

Now, here comes the plot twist: not all neural networks are cut from the same cloth. In this guide, we’ll cover the big three, but in reality, there’s a whole zoo of these things out there.

- Convolutional Neural Networks (CNNs): The Image Detectives. These guys are the real MVPs for pictures and videos. If you’ve ever wondered how an AI can whip up a trippy painting or figure out what’s in your selfie, CNNs are pulling the strings.

- Recurrent Neural Networks (RNNs): The Memory Champs. Anytime you’re dealing with stuff where order actually matters—like lyrics or sentences—RNNs step up. Their secret weapon? A little “memory loop” that lets them remember what came before.

- Transformers: The Rockstars of Modern AI. Google dropped the “Attention Is All You Need” paper in 2017 and flipped the whole game upside-down. Models like ChatGPT are built on this. The magic trick is “self-attention,” which lets them look at a whole sentence at once and decide which words matter most. Total game-changers. (Source: Google AI)
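For the curious, here’s a heavily simplified sketch of the self-attention idea from the Transformers entry: every word scores every other word, and those scores become “how much should I care” weights. The word vectors below are invented, and the sketch skips the learned query, key, and value projections that real Transformers use, so treat it as a cartoon of the mechanism.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that add up to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def self_attention(vectors):
    """For each word, blend all word vectors according to how well they match it."""
    size = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score this word against every word in the sentence (itself included).
        scores = [dot(query, key) / math.sqrt(size) for key in vectors]
        weights = softmax(scores)
        blended = [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(size)]
        outputs.append(blended)
        all_weights.append(weights)
    return outputs, all_weights


words = ["the", "cat", "purred"]
vectors = [[0.1, 0.0], [1.0, 0.8], [0.9, 1.0]]  # made-up 2-number word vectors

outputs, attention = self_attention(vectors)
for word, row in zip(words, attention):
    print(word, "pays attention to", dict(zip(words, (round(w, 2) for w in row))))
```

With these invented vectors, “cat” ends up paying more attention to “purred” than to “the,” which is the “decide which words matter most” trick in miniature.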

Neural Networks in the Wild: Examples You Use Daily

Neural networks are everywhere, lurking behind the scenes like some kind of digital wizard. Your phone? Yeah, it’s got your face memorized thanks to a CNN. And those creepy-accurate recommendations from Netflix or Spotify? Not psychic. Just a neural network stalking your taste. Even self-driving cars are out there juggling a bunch of neural networks at once—spotting stop signs, dodging pedestrians, and trying not to confuse a tumbleweed for a small child. And in hospitals, these networks are starting to eyeball X-rays, sometimes even catching stuff doctors might miss.

👉 Want to See a Neural Network Think?

Go play with TensorFlow Playground, a live, interactive neural network that runs right in your browser. It’s the best way to get a feel for how they work.

The Limits and the Future: What’s Next for Neural Networks?

Now, here’s where things get a little shady. Neural networks have some real trust issues. There’s this whole “black box” mess: the network makes decisions, but ask why, and you’ll mostly get a shrug. Even the people who build these models often can’t fully explain what’s going on inside. So, what’s next? The future’s looking like some wild team-up of neural networks with new kinds of computing. Ideas like Artificial General Intelligence (AGI) and even the biological computing of Organoid Intelligence build on these same principles. And the immense computing power these networks demand is a bottleneck that some researchers hope Quantum Computing could eventually ease.

Conclusion: The “Brain” That Changed Everything

Man, the leap from a single dinky mathematical “neuron” to the monster neural nets running today’s AI? Wild ride. And, look, neural networks aren’t some Harry Potter magic. As we’ve shown in this guide, they’re just clever math tricks that happen to learn stuff if you feed ’em enough data. Honestly, these things are the real MVPs powering the tech revolution. Knowing the basics isn’t just for code wizards anymore. It’s, like, future-proofing your brain.

Need a NASA-grade supercomputer to use neural networks?

Nope. Sure, if you wanna train a beast like GPT-4, you’ll need some serious hardware. But, honestly? You use neural nets every day on your phone. Face unlock? Neural net. Instagram filters? Neural net. They scale up or down, depending on the job.

Wait, can a neural network get, like, self-aware?

Haha, nah. That’s sci-fi territory—for now, anyway. Today’s neural networks are just fancy math machines. They crunch numbers, hunt for patterns, but they’ve got zero inner life. No secret dreams of world domination (yet).

So what’s the deal with “deep learning”?

Basically, it’s just stacking a ton of layers in a neural network—like a really tall sandwich. The more layers, the fancier the stuff it can figure out, like faces in photos or weird slang in tweets. That’s why everyone’s hyped about it.

How much data does a neural net need to get smart?

A lot. Like, sickening amounts. If you want it to tell a cat from a dog, you can’t just show it three cat pics and call it a day. Nah, you’re talking thousands, sometimes millions of images. As a rule of thumb, more good data = smarter network.

Are neural networks the only game in town for AI?

Nope. Before neural networks swaggered in and took over, other methods like Decision Trees were a big deal. Sure, deep learning’s the cool kid for the big, gnarly problems, but the old-school methods are still totally legit, especially when you don’t have a mountain of data lying around.
